Personally, I would like to call Evolution a "probabilistic mathematical function", one that can be statistically shown to exist by some 14 billion years of development of ever more complex patterns, eventually resulting in the advent of self-replicating polymers. Mutations in this process (random assimilation of compatible chemicals) may have conferred a survival advantage and marked the beginning of competition to dominate, i.e. Darwinian Evolution.

This does not necessarily mean that simpler structures cease to exist altogether. They may well continue to exist, but take a different path of evolutionary development.

A great example, IMO, is the fusion of two ancestral ape chromosomes into human chromosome 2, which distinguishes our lineage from the other great apes.

http://www.evolutionpages.com/chromosome_2.htm

If it were not for man's interference through destruction of habitat and hunting for "bushmeat", the great apes would be thriving instead of declining. The same holds true for whales, and for coral reefs, which provide a rich environment for a great variety of fish and other life forms.

https://aquaworld.com.mx/en/how-are-coral-reefs-formed/

"mess with Mother Nature and she will exact a price for destabilizing local and/or global ecosystems"

We cannot regulate global functions, only disturb the symmetries and balance that took the earth some 4 billion years to establish through self-organization of the ecosphere, driven by the earth's inherent potentials derived from chemical reactions and by our stable proximity to the sun.

The rare probabilistic events were due to "outside" interferences, such as the collision with Theia.

https://en.wikipedia.org/wiki/Theia_(planet)

That collision may have introduced several elements (such as gold) into the earth's chemistry and contributed to the eventual emergence of self-replicating polymers, which continued to organize into more complex biochemical structures over the following millions of years.

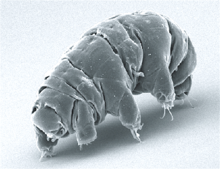

This is why I see the creation of life as a probabilistic but statistically demonstrable process of trial and error, played out over some 2 trillion, quadrillion, quadrillion, quadrillion chemical interactions on earth alone (Hazen). It proceeded in spite of the early chaotic state of the earth's atmosphere, which brought about the great extinction epochs in which only the hardiest or most sheltered organisms survived and, once conditions settled, went on to repopulate the earth.
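To put the size of that number in perspective, here is a rough back-of-envelope sketch in Python. It reads the figure quoted above on the short scale (trillion = 10^12, quadrillion = 10^15) and asks how likely a rare event is to happen at least once over that many independent trials. The per-interaction odds in the loop are purely hypothetical values chosen for illustration, not estimates from Hazen or anyone else, and treating every interaction as an independent trial with the same odds is a simplifying assumption.

```python
import math

# The figure quoted above: "2 trillion, quadrillion, quadrillion, quadrillion"
# (short scale: trillion = 10**12, quadrillion = 10**15).
TRIALS = 2 * 10**12 * (10**15) ** 3  # = 2 * 10**57


def chance_at_least_once(p_per_trial: float, trials: int) -> float:
    """P(at least one success in n independent trials) = 1 - (1 - p)**n."""
    # log1p/expm1 keep precision when p is far too small for (1 - p)
    # to be represented as anything other than 1.0 in floating point.
    return -math.expm1(trials * math.log1p(-p_per_trial))


if __name__ == "__main__":
    # Hypothetical per-interaction odds, for illustration only.
    for p in (1e-60, 1e-57, 1e-55):
        print(f"p = {p:.0e}: chance of at least one success = "
              f"{chance_at_least_once(p, TRIALS):.4f}")
```

The point of the sketch is only that, with this many trials, an event can be wildly improbable per interaction and still be close to certain overall once its odds are not much smaller than one over the number of trials.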

I see this as a "fundamental" process. As Hazen proposed, there may have been other ways to form self-duplicating biomolecules, but they all must have had something like it in common.